Designing Trust: Why User Interfaces Matter in Robotics

Introduction

When developing human-robot interfaces, it’s essential for developers to consider the technical knowledge of the end users. Interfaces should be designed to match the user’s skill level, ensuring usability for both experienced operators and those with minimal technical expertise.

Compatibility between the interface, the robot, and the operator is critical. The interface must seamlessly integrate with the robot’s control system while being intuitive and accessible for the operator. A well-designed interface balances technical functionality with ease of use, providing a smooth interaction between humans and robots and ultimately enhancing productivity and safety.

Graphical User Interface (GUI)

Human-robot interaction can be challenging, as effective use of robotic systems often requires a deep understanding of their underlying software and hardware architecture. This complexity arises because the design of robotic interfaces is typically tailored to the technical specifications of the system, which may not be immediately intuitive to all users.

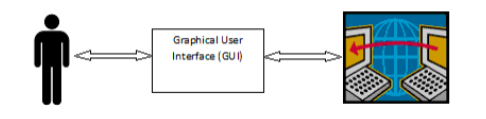

In a graphical user interface (GUI), interaction usually involves a pointing device, such as a mouse or touch screen, which acts as the electronic equivalent of the human hand, facilitating the selection and manipulation of elements on the screen.

In real-world applications, common user interfaces include those of operating systems such as Windows, macOS, Android, and iOS, which offer standardised interaction methods for ease of use.

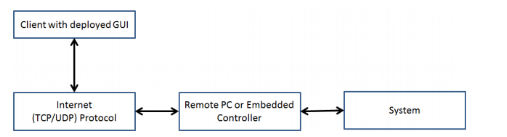

For tele-operated systems, a network connection (for example, an intranet) is needed to transmit and receive data between the user and the robot. In these setups, users provide input through devices such as a keyboard, mouse, or touchscreen. The system then passes this input through a structured control hierarchy to perform the desired action. Once the action is executed, the UI provides feedback, often visual or auditory, to confirm completion and keep the user informed of the robot’s status. This feedback loop is essential in tele-operated systems for maintaining control and ensuring accurate interaction with the robot.
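The input, control-hierarchy and feedback loop described above can be sketched in a few lines of Python. This is a minimal, in-process illustration under stated assumptions: the key bindings, the `Robot` class and the `handle_input` function are hypothetical, the robot moves on a unit grid, and the network link between operator and robot is left out so the example runs on its own.

```python
from dataclasses import dataclass

# Hypothetical key bindings: operator key press -> high-level command.
KEY_BINDINGS = {"w": "forward", "s": "backward", "a": "turn_left", "d": "turn_right"}

# Unit grid step for each heading (degrees, 0 = +x, counter-clockwise positive).
STEP = {0: (1, 0), 90: (0, 1), 180: (-1, 0), 270: (0, -1)}


@dataclass
class Robot:
    """Stand-in for the remote robot; in a real system it sits behind a network link."""
    x: int = 0
    y: int = 0
    heading: int = 0

    def execute(self, command: str) -> str:
        """Low-level layer: apply one command and report the resulting state."""
        dx, dy = STEP[self.heading]
        if command == "forward":
            self.x, self.y = self.x + dx, self.y + dy
        elif command == "backward":
            self.x, self.y = self.x - dx, self.y - dy
        elif command == "turn_left":
            self.heading = (self.heading + 90) % 360
        elif command == "turn_right":
            self.heading = (self.heading - 90) % 360
        else:
            raise ValueError(f"unknown command: {command}")
        return f"done: {command} (x={self.x}, y={self.y}, heading={self.heading})"


def handle_input(robot: Robot, key: str) -> str:
    """Top layer: validate operator input, dispatch it, and return feedback for the UI."""
    command = KEY_BINDINGS.get(key)
    if command is None:
        return f"ignored: no binding for '{key}'"
    return robot.execute(command)
```

Here `handle_input` plays the role of the operator-side layer that validates input, and `Robot.execute` the robot-side layer; in a real tele-operated system the two would be separated by the network connection, with the returned string carried back as feedback. An unmapped key, for example `handle_input(Robot(), "q")`, returns an "ignored" message instead of moving the robot.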

Human Machine Interface (HMI)

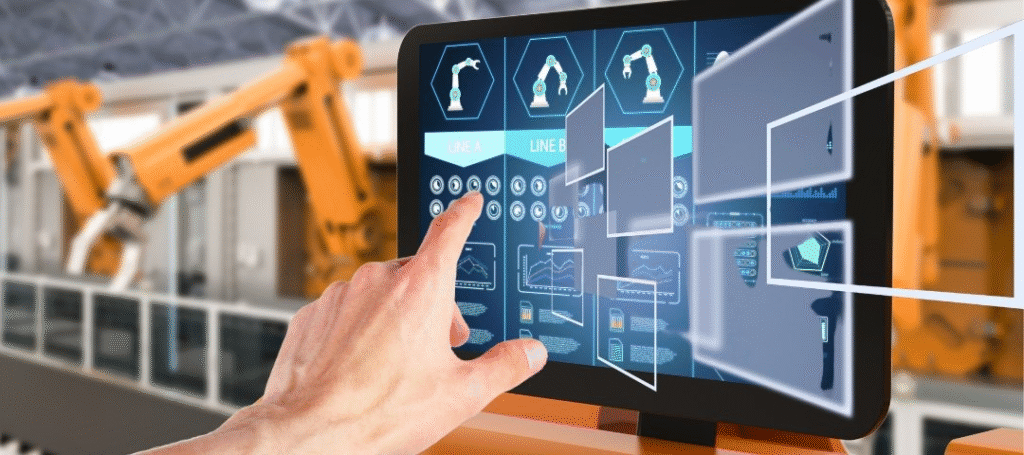

The Human Machine Interface (HMI) is the point where interaction between a human and a machine takes place. In modern industry, Graphical User Interfaces (GUIs) have become the standard for operating robots and other complex machinery. Initially, GUIs were costly and complex to implement, but they have evolved into an accessible and user-friendly norm for human-machine interaction.

From the user’s perspective, the interface is simply the software that enables them to complete tasks in an intuitive manner. Therefore, a well-designed HMI should be easy to learn and use, allowing users to operate machines efficiently without extensive technical knowledge. By offering a clear and functional interface, HMIs empower users to interact with machines in ways that align with their workflows, enhancing productivity and reducing errors in industrial settings.

Command Line Interfaces

Command Line Interfaces (CLIs) are a type of Human Machine Interface (HMI) that rely on text-based commands, typically entered via a keyboard. Users interact with the system by typing commands, which makes CLIs efficient for experienced users who can issue instructions quickly without a graphical interface. A familiar example of a CLI is the MS-DOS command prompt.
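To make the idea concrete, Python's standard `cmd` module can turn a class into a small line-oriented command interpreter. The sketch below assumes a hypothetical five-joint arm and invented commands (`move`, `status`, `quit`); it only updates stored joint angles and does not talk to real hardware.

```python
import cmd


class RobotShell(cmd.Cmd):
    """A toy text-based front end for a robot arm (hypothetical commands)."""
    intro = "Robot CLI. Type help or ? to list commands."
    prompt = "(robot) "

    def __init__(self, joints: int = 5, **kwargs):
        super().__init__(**kwargs)
        self.angles = [0.0] * joints  # current joint angles in degrees

    def do_move(self, arg):
        """move JOINT ANGLE: set one joint (numbered from 1) to ANGLE degrees"""
        try:
            joint, angle = arg.split()
            index = int(joint) - 1
            if not 0 <= index < len(self.angles):
                raise IndexError
            self.angles[index] = float(angle)
        except (ValueError, IndexError):
            self.stdout.write("usage: move JOINT ANGLE\n")
            return
        self.stdout.write(f"joint {joint} -> {float(angle):.1f} deg\n")

    def do_status(self, arg):
        """status: print all joint angles"""
        self.stdout.write(" ".join(f"{a:.1f}" for a in self.angles) + "\n")

    def do_quit(self, arg):
        """quit: leave the shell"""
        return True  # a true return value ends cmdloop()
```

Calling `RobotShell().cmdloop()` starts an interactive prompt; typing `help` lists the commands from their docstrings, which is part of why CLIs suit users who already know what they want to type.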

Touch User Interface

This type of Graphical User Interface (GUI) relies on a touchscreen or touchpad for both input and output. Users interact with the device by touching the screen, which registers commands directly. To confirm entries, this interface often uses haptic feedback, a subtle vibration or pulse that signals a command has been successfully entered.
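Behind a touch interface, each tap is usually hit-tested against the on-screen controls before a command is issued. The sketch below shows that step together with a haptic confirmation; the `Button` layout and the `haptic_pulse` callback (standing in for a device's vibration driver, with an assumed 30 ms pulse) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Button:
    label: str
    x: int  # left edge in pixels
    y: int  # top edge in pixels
    w: int  # width in pixels
    h: int  # height in pixels

    def contains(self, px: int, py: int) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def on_touch(buttons: List[Button], px: int, py: int,
             haptic_pulse: Callable[[int], None]) -> Optional[str]:
    """Return the label of the touched button, confirming the hit with a short pulse."""
    for button in buttons:
        if button.contains(px, py):
            haptic_pulse(30)  # hypothetical driver call: vibrate for 30 ms
            return button.label
    return None  # the tap missed every button: no command and no pulse
```

Passing the haptic driver in as a callback keeps the hit-testing logic testable without a physical screen. A tap that misses every button produces neither a command nor a pulse, so the user is not misled into thinking an entry was accepted.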

This type of interface is commonly found in smartphones, photocopiers, and other systems where fine input precision is not critical. Its simplicity and responsiveness make it ideal for applications that prioritise ease of use and quick, intuitive interaction.

Gesture Interface

The gesture interface is an emerging type of user interface that accepts input in the form of hand gestures and mouse gestures. This innovative interface is particularly promising for users with disabilities, as it can interpret natural gestures commonly used in everyday interactions, making technology more accessible and intuitive.

Researchers are actively working to improve the usability and accuracy of gesture interfaces, aiming to create systems that respond reliably to a wide variety of gestures. By enabling users to interact without traditional input devices, gesture interfaces offer a touch-free, accessible approach with potential applications in assistive technology, virtual reality, and smart environments.

Voice Interface

The voice interface, built on speech recognition technology, enables users to interact with a system by speaking commands, with the system responding through voice prompts. This interface is highly effective because it removes the need for physical interaction, allowing users to control devices hands-free, which is especially useful where manual control is not feasible or convenient.

However, voice interfaces face challenges from the wide range of accents, dialects, and speech patterns worldwide, which makes it difficult for machines to recognise and interpret spoken input consistently. Although the field is still maturing, ongoing research continues to improve accuracy and expand its applicability in areas such as virtual assistants, automated customer service, and accessibility tools for individuals with disabilities.
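One common way to cope with recognition errors is to act only on high-confidence results from a small, fixed vocabulary and to answer everything else with a voice prompt. The sketch below assumes a speech recogniser (not shown) that returns a transcript plus a confidence score between 0 and 1; the command words, the 0.8 threshold and the reply phrases are illustrative assumptions.

```python
# Hypothetical vocabulary: spoken keyword -> robot command.
COMMANDS = {"start": "START_CYCLE", "stop": "STOP_CYCLE", "home": "GO_HOME"}


def interpret(transcript: str, confidence: float, threshold: float = 0.8):
    """Turn speech-recogniser output into (command, spoken_reply).

    Low-confidence or ambiguous results are rejected with a prompt to repeat,
    rather than guessing, because acting on a misheard command is the costlier error.
    """
    if confidence < threshold:
        return None, "Sorry, I didn't catch that. Please repeat."
    # Lower-case the words and drop trailing punctuation before matching.
    words = [w.strip(".,!?") for w in transcript.lower().split()]
    matches = [COMMANDS[w] for w in words if w in COMMANDS]
    if len(matches) != 1:
        return None, "Please say exactly one of: " + ", ".join(COMMANDS)
    return matches[0], f"Confirmed: {matches[0].replace('_', ' ').lower()}."
```

For example, `interpret("Please stop now.", 0.93)` maps to `STOP_CYCLE` with the reply "Confirmed: stop cycle.", while the same words at confidence 0.5, or a phrase containing two command words, produce only a prompt to repeat.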

For industrial robots, a poorly designed user interface can lead to frequent errors, potentially resulting in catastrophic outcomes such as equipment damage, safety hazards, and production delays. A well-designed interface, on the other hand, simplifies the interaction by handling complex operations in the background. This minimises the user’s need to understand intricate theories, remember specific commands, or implement complicated algorithms, creating a smoother, more intuitive experience.

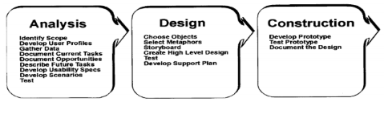

To create an interface that meets users’ expectations and requirements, the development process generally follows three essential phases:

- Analysis: This phase involves understanding the user’s needs, tasks, and workflow to identify what the interface should accomplish. It includes researching user requirements, defining usability goals, and analysing potential challenges.

- Design: Based on the findings from the analysis phase, the design phase involves developing an interface layout and interaction model that prioritises ease of use, accessibility, and efficiency. This includes prototyping, testing with users, and refining based on feedback.

- Construction: In this phase, the interface is built and integrated into the system, ensuring it functions smoothly with the robot’s hardware and software. Developers focus on finalising the interface, testing for errors, and ensuring that it provides the intended experience.

These phases help create a user-centred, intelligent interface that aligns with the user’s workflow, reduces the risk of errors, and improves overall productivity and safety in industrial environments.

Examples of GUI applications

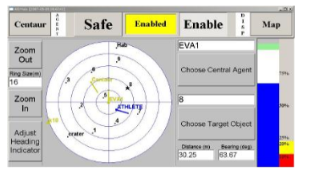

The AIDMap GUI is an interface designed to provide a graphical display of all robots and stable locations in an environment, helping users track and manage robot positions in real time.

Key features of this GUI include:

Object Representation: Objects are displayed as dots labelled with their names, if available, for easy identification.

Heading Indicators: If an object is a robot and has a valid heading, an indicator shows the robot’s orientation, providing directional context.

Map Centering: The centre of the map can be set to either a fixed location or a specific robot, allowing flexibility based on user needs.

Real-Time Position Updates: As robots move, their positions are automatically updated on the map, enabling continuous tracking.

Targeting Information: When a target object is selected, the GUI displays the distance and bearing to the target as text, offering precise navigational data.
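The distance and bearing readout described above can be computed from two map positions. The sketch assumes a flat local map in metres with x pointing east and y pointing north, with compass bearings and headings measured clockwise from north; the source does not give AIDMap's actual coordinate conventions, so treat these as assumptions. The relative bearing is what a heading indicator would need to show how far the robot must turn toward the target.

```python
import math


def distance_and_bearing(robot_xy, target_xy):
    """Distance (metres) and compass bearing (degrees clockwise from north).

    Assumes a flat local map with x pointing east and y pointing north.
    """
    dx = target_xy[0] - robot_xy[0]
    dy = target_xy[1] - robot_xy[1]
    distance = math.hypot(dx, dy)
    # atan2(east, north) gives the angle measured clockwise from north.
    bearing = math.degrees(math.atan2(dx, dy)) % 360.0
    return distance, bearing


def relative_bearing(bearing, heading):
    """Clockwise angle (0-360) from the robot's heading to the target."""
    return (bearing - heading) % 360.0


def target_label(robot_xy, heading, target_xy, name):
    """Text readout of the kind shown when a target is selected."""
    d, b = distance_and_bearing(robot_xy, target_xy)
    return f"{name}: {d:.1f} m, bearing {b:.0f}°, turn {relative_bearing(b, heading):.0f}°"
```

With the robot at the origin facing east (heading 90°), `target_label((0, 0), 90.0, (3, 4), "dock")` gives `dock: 5.0 m, bearing 37°, turn 307°`, i.e. a 307° clockwise (53° anticlockwise) turn.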

In addition to the map display, the AIDMap GUI likely includes the following interfaces to support interaction:

Selection Interface: Allows users to select target objects or robots on the map, triggering the display of distance and bearing information.

Information Display Interface: Text fields that show real-time data such as robot names, headings, distances, and bearings.

Update and Refresh Interface: Ensures that map data remains current, reflecting any movement or changes in object positioning.

The AIDMap GUI enhances situational awareness, making it easier for users to monitor and manage robotic operations accurately within a defined space.

Interfaces like AIDMap often include the following applications:

Task Checklist GUI: This part of the GUI serves as a task display panel that lists the tasks to be completed along with their estimated completion times.

Consumables GUI: The gauge display in this GUI provides a real-time overview of an agent’s consumable resources for both current and future tasks. Limits can be set so that the user is notified when a level drops below them.

Camera GUI: The Camera GUI provides a live feed from the selected cameras, enhancing situational awareness by allowing direct visual monitoring of areas or items encountered by the agent. Users can switch between cameras or use pan/tilt functions to adjust the view.
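The limit-based notification in the Consumables GUI amounts to comparing each gauge against its threshold, both now and after the queued tasks. Here is a minimal sketch; the `Consumable` fields, including the `planned_use` forecast, are assumptions for illustration rather than details of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Consumable:
    name: str
    level: float              # current amount (e.g. % battery, litres of fluid)
    limit: float              # operator-set threshold for this gauge
    planned_use: float = 0.0  # expected consumption of the queued tasks

    def alerts(self) -> list:
        """Warnings for this gauge: one for the current level, or one for the forecast."""
        if self.level < self.limit:
            return [f"{self.name} below limit ({self.level} < {self.limit})"]
        if self.level - self.planned_use < self.limit:
            return [f"{self.name} will drop below limit after queued tasks"]
        return []


def check_panel(gauges) -> list:
    """Collect the warnings from every gauge on the panel, in display order."""
    return [message for gauge in gauges for message in gauge.alerts()]
```

Checking the forecast as well as the current level lets the operator react before a task starts rather than part-way through it.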

Graphical User Interface for Remote Control of a Robotic Arm

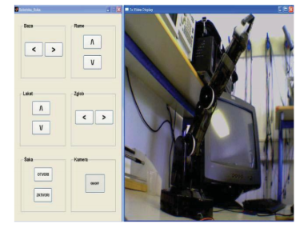

The graphical user interface (GUI) developed by Vajnberger et al. is designed for remote operation of a five-degree-of-freedom (DOF) robotic arm. Although this robotic arm lacks built-in position sensors, the interface compensates with robust visual feedback alongside the control panel, providing a user-friendly experience well suited to remote operation.

Developed with MATLAB’s GUIDE toolbox, the GUI enables users to select and control each DOF individually.

By clicking on directional arrows, users can easily adjust the arm’s position. For added protection, contact micro-sensors at each joint stop movement once the joint reaches its end position, preventing overextension and potential damage to the arm.
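The arrow-button control and end-of-travel protection can be modelled in software as follows. This is only an illustration: on the arm described above the micro-sensors are physical contacts, whereas here `limit_switch_pressed` simulates them from assumed joint limits, and the 5° step per click is an invented value.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    name: str
    angle: float      # current angle in degrees
    min_angle: float  # assumed end of travel in the negative direction
    max_angle: float  # assumed end of travel in the positive direction

    def limit_switch_pressed(self, direction: int) -> bool:
        # Simulated contact micro-sensor: closed when the joint is at the end
        # of its travel in the requested direction.
        if direction > 0:
            return self.angle >= self.max_angle
        return self.angle <= self.min_angle


def jog(joint: Joint, direction: int, step: float = 5.0) -> bool:
    """Handle one arrow click: move a single joint by `step` degrees (direction +1 or -1).

    Returns False, without moving, if the limit switch for that direction is closed.
    """
    if joint.limit_switch_pressed(direction):
        return False
    target = joint.angle + direction * step
    # Clamp so that a coarse step cannot overshoot the end stop.
    joint.angle = min(max(target, joint.min_angle), joint.max_angle)
    return True
```

Because `jog` moves exactly one joint per click, it also reflects the single-joint limitation of the original interface.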

The robotic arm uses two communication links: one between the server and the client, and another for low-level communication between the arm and the computer over RS-232, a widely used serial standard for device-to-device communication.
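The source does not describe the message format used on the RS-232 link, so the framing below is purely hypothetical. It shows a common pattern for low-level serial commands: a start byte, a fixed-size payload packed with Python's `struct` module, and a simple checksum so the receiver can reject corrupted frames.

```python
import struct

START_BYTE = 0xAA  # hypothetical frame marker


def encode_jog(joint: int, direction: int, steps: int) -> bytes:
    """Pack a single-joint jog command into a byte frame for the serial link.

    Assumed layout: start (1 B) | joint (1 B) | direction (1 B, signed)
    | steps (2 B, big-endian) | checksum (1 B).
    The checksum is the sum of the payload bytes modulo 256.
    """
    payload = struct.pack(">BbH", joint, direction, steps)
    checksum = sum(payload) % 256
    return bytes([START_BYTE]) + payload + bytes([checksum])


def decode_jog(frame: bytes):
    """Inverse of encode_jog; raises ValueError on a malformed or corrupted frame."""
    if len(frame) != 6 or frame[0] != START_BYTE:
        raise ValueError("bad frame header")
    payload, checksum = frame[1:5], frame[5]
    if sum(payload) % 256 != checksum:
        raise ValueError("checksum mismatch")
    return struct.unpack(">BbH", payload)
```

On a real port the encoded bytes would be written through a serial library (for example pySerial's `serial.Serial(...).write(frame)`); the byte layout itself must match whatever the arm's controller expects.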

While effective, this interface has several limitations:

System-Specific and Software Requirements: Developed in MATLAB, the interface requires additional software, making it specific to systems with MATLAB installed and needing extra setup before use.

Limited Control Options: The GUI allows only individual joint control, with no option for simultaneous multi-joint operation.

Limited Functionality: The interface lacks a range of control functions, limiting its versatility for different tasks.

Restricted to Single System: Designed specifically for a five DOF robotic arm, the GUI requires redevelopment to operate other systems or robotic configurations.

To improve usability and functionality, it is recommended to develop a more versatile interface with features such as joystick control, multi-joint operation, and expanded functionality, making it adaptable to various robotic systems and easier to deploy across different platforms.
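Two of the recommended features, simultaneous multi-joint motion and joystick control, can be sketched briefly. The functions below are hypothetical: the first interpolates all joints together in joint space so they start and finish at the same time, and the second maps joystick axes to joint speeds with a small dead zone. The 30 deg/s maximum speed and 0.1 dead zone are assumed values.

```python
def interpolate_joints(start, goal, steps):
    """Yield joint vectors that move every joint at once, all arriving together.

    Straight-line interpolation in joint space over `steps` increments (steps >= 1).
    """
    if len(start) != len(goal):
        raise ValueError("start and goal must have the same number of joints")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    for i in range(1, steps + 1):
        t = i / steps
        yield [s + (g - s) * t for s, g in zip(start, goal)]


def joystick_to_velocities(axes, max_speed=30.0, deadzone=0.1):
    """Map joystick axes in [-1, 1] to joint speeds (deg/s), ignoring small drift."""
    return [0.0 if abs(a) < deadzone else a * max_speed for a in axes]
```

Linear joint-space interpolation is the simplest way to coordinate joints; it does not make the end effector follow a straight line, which would require inverse kinematics.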

Graphical User Interface and Sensors

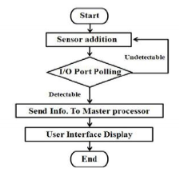

The user interface for a robotic manipulator works much like the interface of a computer program: it follows a general design pattern that provides users with controls and feedback for interacting with the robot. However, for a robotic manipulator, the interface is often hosted on a remote machine that communicates with the robot over a radio or data link.

Sensors play a crucial role in robotic systems, serving as the robot’s “eyes” and “ears.” These include a variety of sensor types, such as laser range finders, shock sensors, temperature sensors, pressure sensors, and GPS, each essential to robotic operation.

In real-time operation, these sensors relay the robot’s conditions to the operator, helping them make informed control decisions. For autonomous robots, sensor data is processed by an onboard computer, allowing the robot to interpret its surroundings and act independently based on the information collected. Sensors are thus fundamental to both remote and autonomous robotic systems, providing critical situational awareness and enhancing responsiveness.
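The relaying of sensor conditions to the operator can be illustrated with a simple range check that formats each reading for display and flags anything outside its limits. The sensor names, units and safe ranges below are invented for illustration; an onboard computer in an autonomous robot could use the same `ok` flag to decide, for example, to stop and wait.

```python
# Hypothetical safe operating ranges: sensor name -> (low, high, unit)
SAFE_RANGES = {
    "temperature": (0.0, 70.0, "°C"),
    "pressure": (90.0, 110.0, "kPa"),
    "range_to_obstacle": (0.5, float("inf"), "m"),
}


def status_report(readings):
    """Format sensor readings for the operator, flagging any outside its safe range.

    Returns (lines, ok): the display lines and whether every reading is in range.
    Sensors missing from the readings are reported as "no data" rather than skipped.
    """
    lines, ok = [], True
    for name, (low, high, unit) in SAFE_RANGES.items():
        value = readings.get(name)
        if value is None:
            lines.append(f"{name}: no data")
            ok = False
        elif low <= value <= high:
            lines.append(f"{name}: {value:g} {unit}")
        else:
            lines.append(f"{name}: {value:g} {unit}  <-- OUT OF RANGE")
            ok = False
    return lines, ok
```

Treating a missing reading as a fault, rather than ignoring it, matters for remote operation, where a silent sensor can otherwise look like a healthy one.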

